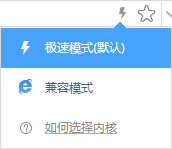

Nowadays, when OEMs launch new vehicle models, the number of on-board sensors such as LiDARs, cameras and millimeter-wave radars is always a highlight, and it can sometimes reach a daunting few dozen.

How do we know whether these complex perception systems can accurately identify all types of obstacles? More precisely, how can OEMs verify the performance of the perception system in complex driving scenes?

The mature automotive industry's answer is "testing and evaluation".

01

Human labor too inefficient? Use AI for evaluation instead

Today, a major headache for OEMs is that traditional testing and evaluation methods can hardly keep up with the soaring demands of intelligent vehicle development.

The perception system of an intelligent vehicle is an integrated software and hardware system built on AI and big data. Traditional testing in man-made scenes cannot cover every corner case found in real-world driving, and renting test sites is expensive.

Developing ADAS/AD functions for a new scene requires tens or even hundreds of thousands of frames of ground truth data, collected and labeled through real-world road tests. As demand grows for more powerful ADAS/AD functions, the volume of road-test ground truth data needed grows exponentially and is reaching the petabyte (PB) level, which is truly "big data".

In practice, labeling just 7 days of road test data would keep several people working continuously for a few months. And with traditional manual frame-by-frame labeling, errors caused by misjudging the shape and size of obstacles are impossible to control.

Therefore, in labeling alone, traditional testing and evaluation methods cannot meet the cost, efficiency and accuracy requirements of multi-scene, big-data testing and evaluation for intelligent vehicles.

But, as you know, according to the V-model of automotive development processes, testing and development go hand-in-hand. Every key point in the development process must be fully evaluated and verified. In other words, fast and accurate verification is the key to accelerating product development, which is also why evaluation and verification must emphasize both efficiency and quality.

Since the perception system is AI-based and its testing and evaluation is a "big data" problem, can we use AI to tackle evaluation itself?

02

A thousand times more efficient than manual labeling, with rich and reliable ground truth

So how can AI be used to solve the evaluation problem?

The answer given by RoboSense, a company with over ten years of experience in LiDAR perception technology, is the RS-Reference ground truth generation & evaluation solution.

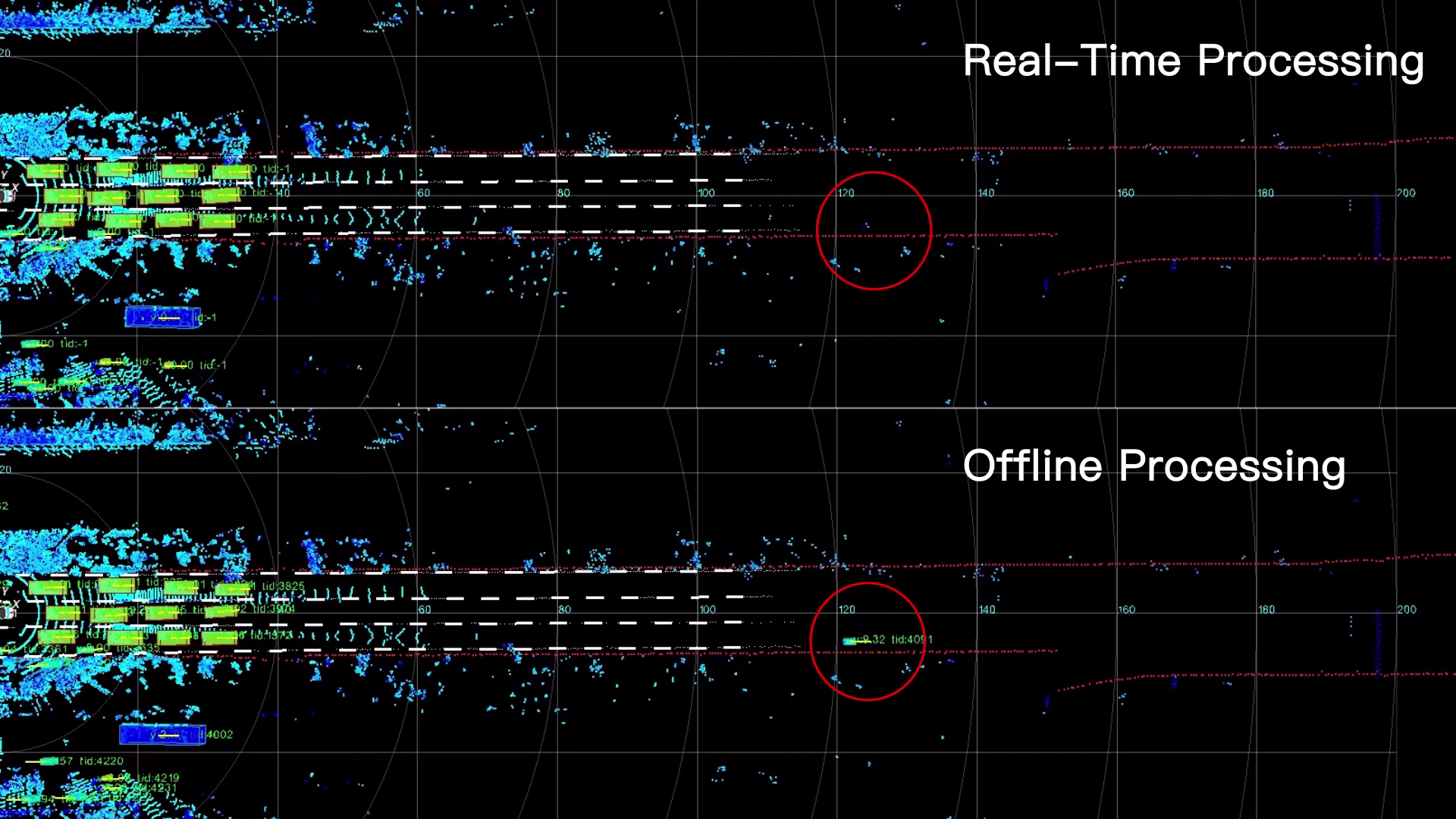

RS-Reference consists of two parts, a ground truth generation system and a perception evaluation system.

RS-Reference makes full use of AI technology to ensure the quality of real-world data. The ground truth generation system combines a multi-sensor integrated data collection system with high-performance offline AI perception software. The former collects the raw data, and the latter generates ground truth data through intelligent computation.


RS-Reference features a high-end design. Its raw data collection system adopts a 128-beam LiDAR, blind-spot LiDARs, a long-range millimeter-wave radar, industrial cameras, a fiber-optic IMU, high-precision RTK positioning and multi-sensor fusion to ensure the redundancy and accuracy of the raw data.

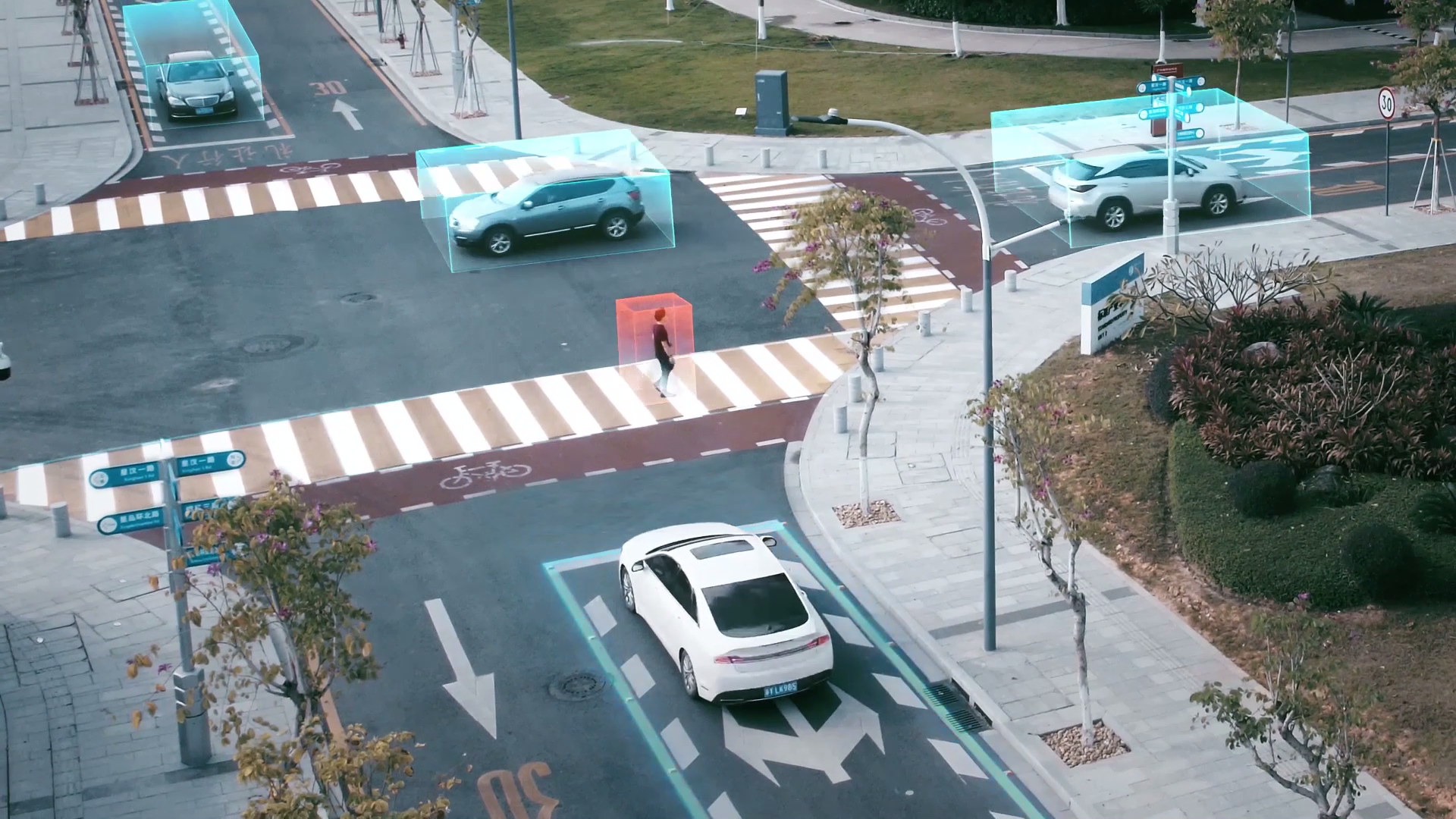

The high-performance offline AI perception software at the core of RS-Reference integrates RoboSense's patented point cloud perception technology, developed over more than 10 years, with advanced high-performance AI perception technology. It uses offline processing to detect and track obstacles throughout their life cycles with excellent precision.
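One reason offline processing helps is that, unlike on-board real-time perception, it can look at frames that come *after* the one being labeled. The sketch below is a minimal, hypothetical illustration of that idea, not RoboSense's actual algorithm: it fills frames where a tracked obstacle was missed by linearly interpolating between the detections before and after the gap, something an online tracker cannot do because it has not yet seen the later frame. The function name and the 1-D position representation are assumptions made for brevity.

```python
from typing import List, Optional


def fill_track_gaps(xs: List[Optional[float]]) -> List[Optional[float]]:
    """Fill missed detections inside one obstacle track (hypothetical helper).

    xs holds one position per frame over the obstacle's life cycle, with None
    for frames where the detector missed it. Gaps bounded by detections on
    both sides are filled by linear interpolation; leading or trailing gaps
    stay None because there is no detection on one side to interpolate to.
    """
    out = list(xs)
    known = [i for i, x in enumerate(xs) if x is not None]
    # Walk consecutive pairs of detected frames; any frames between them are gaps.
    for a, b in zip(known, known[1:]):
        if b - a > 1:
            xa, xb = xs[a], xs[b]
            for k in range(a + 1, b):
                t = (k - a) / (b - a)  # fraction of the way from frame a to frame b
                out[k] = xa + t * (xb - xa)
    return out
```

For example, `fill_track_gaps([0.0, None, None, None, 4.0])` yields `[0.0, 1.0, 2.0, 3.0, 4.0]`. A real offline pipeline would interpolate full 3-D boxes and typically use a motion model rather than straight lines; linear interpolation is used here only to keep the example small.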

After the test vehicle, equipped with the data collection system, finishes collecting data, the ground truth generation software labels the data automatically, producing ground truth thousands of times more efficiently than manual labeling.

Thanks to the advanced high-performance offline AI perception software, the ground truth is accurate, reliable and rich in content, covering both dynamic information such as the speed, position, size, category and ID of obstacles, and static information such as lane lines, road boundaries and road curvature.
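To make the list of ground truth contents concrete, here is one possible way to model a single labeled frame. This is a hypothetical schema for illustration only; RS-Reference's actual data format is not described in this article, and all class and field names (and the units in the comments) are assumptions.

```python
from dataclasses import dataclass, field
from math import hypot
from typing import List, Tuple


@dataclass
class ObstacleTruth:
    """Dynamic ground truth for one obstacle in one frame (hypothetical schema)."""
    track_id: int                          # ID kept constant over the obstacle's life cycle
    category: str                          # e.g. "car", "pedestrian"
    position: Tuple[float, float, float]   # box center (x, y, z), meters
    size: Tuple[float, float, float]       # length, width, height, meters
    velocity: Tuple[float, float]          # (vx, vy), meters per second

    def speed(self) -> float:
        """Scalar speed derived from the velocity vector."""
        return hypot(*self.velocity)


@dataclass
class RoadTruth:
    """Static ground truth: polylines as lists of (x, y) points, meters."""
    lane_lines: List[List[Tuple[float, float]]] = field(default_factory=list)
    road_boundaries: List[List[Tuple[float, float]]] = field(default_factory=list)
    curvature: float = 0.0                 # 1 / radius, in 1/m


@dataclass
class FrameTruth:
    """All ground truth attached to one timestamped frame."""
    timestamp: float                       # seconds
    obstacles: List[ObstacleTruth] = field(default_factory=list)
    road: RoadTruth = field(default_factory=RoadTruth)


# Usage: one frame containing a single car moving at 5 m/s.
frame = FrameTruth(
    timestamp=0.1,
    obstacles=[ObstacleTruth(7, "car", (10.0, 2.0, 0.8), (4.5, 1.8, 1.5), (3.0, 4.0))],
)
```

Keeping `track_id` stable across frames is what lets later evaluation steps reason about whole obstacle trajectories rather than isolated boxes.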

03

Full-stack evaluation tool chain accelerates the iterative development and verification cycle

The perception evaluation system of RS-Reference provides customized evaluation and a complete tool chain to meet varied customer needs.

RS-Reference offers rich software functions, including data management, data visualization, manual verification and perception KPI report generation. It also provides evaluation tools for cameras, LiDARs, millimeter-wave radars and other sensors, and supports customized sensor evaluation so that OEMs, Tier 1s and others can evaluate the various sensor models they use.
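A perception KPI report usually starts from counts of true positives (TP), false positives (FP) and false negatives (FN) obtained by comparing perception output against ground truth. The sketch below assumes those counts are already available per obstacle category and turns them into precision, recall and F1. The choice of metrics and the function name are illustrative assumptions; the article does not list which KPIs RS-Reference reports.

```python
from typing import Dict, Tuple


def kpi_report(counts: Dict[str, Tuple[int, int, int]]) -> Dict[str, Dict[str, float]]:
    """Per-category precision, recall and F1 from (TP, FP, FN) counts.

    Hypothetical helper. Ratios with a zero denominator are reported as 0.0
    so that empty categories do not raise errors.
    """
    report: Dict[str, Dict[str, float]] = {}
    for category, (tp, fp, fn) in counts.items():
        precision = tp / (tp + fp) if tp + fp else 0.0  # share of detections that are real
        recall = tp / (tp + fn) if tp + fn else 0.0     # share of real obstacles detected
        # 2TP / (2TP + FP + FN) equals the harmonic mean of precision and recall.
        f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
        report[category] = {"precision": precision, "recall": recall, "f1": f1}
    return report


# Usage: counts per category, e.g. accumulated over a test drive.
report = kpi_report({"car": (8, 2, 2), "pedestrian": (3, 1, 0)})
```

Here `report["car"]` contains precision, recall and F1 of 0.8 each, while pedestrians score a recall of 1.0 but a precision of 0.75, showing how a per-category report can expose false alarms that an overall average would hide.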

RS-Reference's testing and verification program also covers data cleaning, data alignment, data association, ground truth matching and more. It even supports the iterative verification loop of program optimization and adjustment, greatly improving the development efficiency of on-board perception software.
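Two of these steps, data alignment and ground truth matching, can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not RS-Reference's implementation: alignment pairs each perception frame with the ground truth frame nearest in time (within a tolerance), and matching pairs detected obstacles with ground truth obstacles greedily by 2-D center distance inside a gate. Production evaluations commonly use box overlap (IoU) and optimal assignment such as the Hungarian algorithm instead; the greedy distance rule, function names, tolerance and gate values here are all hypothetical.

```python
from bisect import bisect_left
from math import dist
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def align_frame(t: float, truth_times: List[float], tol: float) -> Optional[int]:
    """Index of the ground truth frame closest in time to t, or None if none is within tol.

    truth_times must be sorted in ascending order.
    """
    i = bisect_left(truth_times, t)
    # The nearest timestamp is either just before or just after the insertion point.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(truth_times)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(truth_times[j] - t))
    return best if abs(truth_times[best] - t) <= tol else None


def match_objects(dets: List[Point], truths: List[Point], gate: float) -> Tuple[int, int, int]:
    """Greedy one-to-one matching by center distance; returns (TP, FP, FN)."""
    # All detection/truth pairs close enough to be plausible, nearest first.
    pairs = sorted(
        (dist(d, g), di, gi)
        for di, d in enumerate(dets)
        for gi, g in enumerate(truths)
        if dist(d, g) <= gate
    )
    used_d, used_g = set(), set()
    for _, di, gi in pairs:
        if di not in used_d and gi not in used_g:  # each object may match at most once
            used_d.add(di)
            used_g.add(gi)
    tp = len(used_d)
    return tp, len(dets) - tp, len(truths) - tp  # unmatched dets are FP, unmatched truths FN
```

For instance, `align_frame(0.12, [0.0, 0.1, 0.2], tol=0.05)` returns `1`, and matching detections `[(0, 0), (10, 0), (50, 50)]` against ground truth `[(0.5, 0), (10, 1), (20, 20)]` with a 2 m gate gives `(2, 1, 1)`: two correct detections, one false alarm and one missed obstacle. These counts are exactly what a KPI report aggregates.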

After obtaining an evaluation report from RS-Reference, engineers iterate on the solution to fix weaknesses in the perception system and then evaluate it again. This cycle repeats until an ideal perception solution is reached, ensuring the safety of high-performance intelligent vehicles.

04

Empowering upstream and downstream ADAS/AD enterprises: the ground truth and evaluation system has great potential

From the perspective of collaborative development across the industry chain, the intelligent ground truth and evaluation system addresses the pain points of automakers, ADAS solution providers, third-party evaluation institutions and other players, improving development efficiency and adding business value.

For OEMs, because corner cases are rare, autonomous vehicles need hundreds of millions or even hundreds of billions of kilometers of test mileage to be effectively verified. With the RS-Reference ground truth and perception evaluation system, OEMs and ADAS system providers can make full use of scenario database data from the early stages of project development, effectively optimizing and improving the safety performance of their autonomous driving systems.

Meanwhile, OEMs can verify suppliers’ sensor or perception system solutions through RS-Reference to screen and select suppliers faster.

For ADAS system providers, RS-Reference can not only improve the development efficiency of camera or millimeter-wave radar perception solutions, but also serve as a third-party tool to objectively verify the performance of the provider's own perception solutions, winning more recognition and orders from OEM customers.

Third-party testing organizations can directly provide evaluation services for perception systems based on RS-Reference.

RoboSense's RS-Reference system has been on the market for more than 4 years and has served dozens of global OEM and Tier 1 customers across a large number of test fleets.

Drawing on this experience, RoboSense, together with China Intelligent and Connected Vehicles (Beijing) Research Institute Co., Ltd., Beijing Huawei Digital Technologies Co., Ltd. and National Automobile Quality Supervision and Test Center (Xiangfan), jointly developed the Requirements and Methods for LiDAR Perception Evaluation of Intelligent and Connected Vehicles, which puts forward a complete set of evaluation requirements, methods and indicators to provide standards and guidelines for testing and evaluating LiDAR-based on-board perception systems.

In the near future, intelligent ground truth and perception evaluation systems will become a recognized industry practice that safeguards intelligent vehicles and serves as a model of close cooperation between humans and AI.